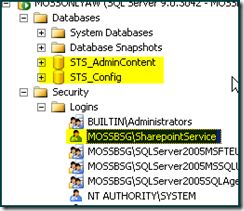

We have a lot of projects that are virtualized. When we finish with a project, it is common practice to dehydrate the servers and put them away. For one of our customers' projects, we followed this process over a year ago. A lot has changed since then. We moved to a different building, and in the process we added new servers, cleaned up some old ADs, changed operating systems (Windows Server 2008 Datacenter... oh yeah!), and so on.

Bringing this one project's environment back online after all of those infrastructure changes was a challenge. This enterprise application includes Oracle, app servers, web servers, test servers, and more.

Of all the VHDs we needed to bring back, only two were recognized by Hyper-V! Checking the rest of them returned the "The file or directory is corrupted and unreadable" message... :(

This is how to check a VHD's integrity in Hyper-V: attach the VHD file to the IDE controller in the virtual machine's settings, then click the Inspect button.

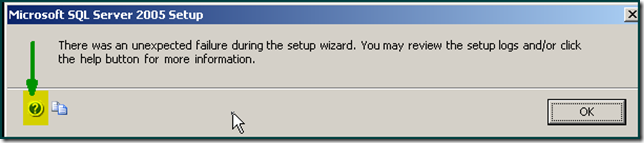

> An error occurred when attempting to retrieve the virtual hard disk "F:\hydrate\Project1-Agent.vhd" on server XXX.
>
> The file or directory is corrupted and unreadable.

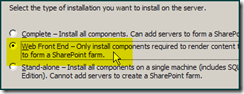

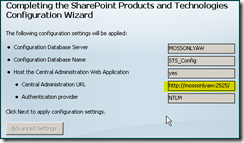
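If you would rather check a batch of VHDs without attaching each one in Hyper-V Manager, you can inspect the file's footer directly. The Python sketch below is an alternative under stated assumptions, not what Hyper-V's Inspect button does internally. It assumes the layout from Microsoft's VHD Image Format Specification: a 512-byte footer at the end of the file that starts with the `conectix` cookie, a big-endian checksum at offset 64, the disk type at offset 60, and, for dynamic and differencing disks, a `cxsparse` header at the data offset stored at offset 16. The function names are hypothetical. A clean result only means these structures look intact; it does not prove that the rest of the disk is readable.

```python
import os
import struct
import sys
from typing import List

# Assumption: footer layout as described in the VHD Image Format Specification.
FOOTER_SIZE = 512
DISK_TYPES = {2: "fixed", 3: "dynamic", 4: "differencing"}


def footer_checksum(footer: bytes) -> int:
    """One's complement of the byte sum, skipping the checksum field (offsets 64-67)."""
    total = sum(footer[:64]) + sum(footer[68:FOOTER_SIZE])
    return ~total & 0xFFFFFFFF


def check_footer(footer: bytes) -> List[str]:
    """Return the problems found in a 512-byte VHD footer (empty list if none)."""
    if len(footer) != FOOTER_SIZE:
        return [f"footer is {len(footer)} bytes, expected {FOOTER_SIZE}"]
    problems = []
    if footer[0:8] != b"conectix":
        problems.append("missing 'conectix' cookie")
    (stored,) = struct.unpack_from(">I", footer, 64)
    if stored != footer_checksum(footer):
        problems.append("footer checksum mismatch")
    (disk_type,) = struct.unpack_from(">I", footer, 60)
    if disk_type not in DISK_TYPES:
        problems.append(f"unexpected disk type {disk_type}")
    return problems


def check_vhd(path: str) -> List[str]:
    """Check the footer of a VHD file and, for non-fixed disks, the dynamic header cookie."""
    with open(path, "rb") as f:
        size = f.seek(0, os.SEEK_END)
        if size < FOOTER_SIZE:
            return ["file is smaller than a VHD footer"]
        f.seek(size - FOOTER_SIZE)
        footer = f.read(FOOTER_SIZE)
        problems = check_footer(footer)
        if problems:
            return problems
        (disk_type,) = struct.unpack_from(">I", footer, 60)
        if DISK_TYPES[disk_type] != "fixed":
            # Dynamic and differencing disks point to a header that starts with "cxsparse".
            (data_offset,) = struct.unpack_from(">Q", footer, 16)
            f.seek(data_offset)
            if f.read(8) != b"cxsparse":
                problems.append("dynamic disk header ('cxsparse') not found")
    return problems


if __name__ == "__main__":
    for vhd_path in sys.argv[1:]:
        issues = check_vhd(vhd_path)
        print(vhd_path, "OK" if not issues else "; ".join(issues))
```

Saved as a script (for example, a hypothetical `check_vhd.py`), it could be run as `python check_vhd.py F:\hydrate\Project1-Agent.vhd` to print one line per file, which makes it easier to triage many VHDs before deciding which ones need the Virtual PC treatment.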

At this point, recreating all of the settings and configurations stored on those servers would have taken days! Some posts in the TechNet forums mentioned that people got their disks working by reopening the files in Virtual PC. This is how I got my corrupted VHDs working:

First, I created a new virtual machine in Virtual PC with a new hard disk. Then I went to Settings | Hard Disk 1 and loaded the corrupted VHD file.

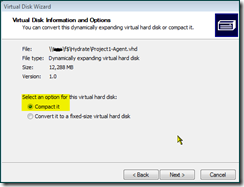

On that settings page you will find the Virtual Disk Wizard. Click it, go through the prompts, and select the option to Edit an existing virtual disk.

Then choose to Compact it. I chose Compact because if you select "Convert it to a fixed-size virtual hard disk", the wizard makes the new disk EXACTLY the size of the data currently written to it. For example, if you initially allocated 20GB to the disk and have used 12GB of it, then instead of a 20GB disk with 8GB free, you end up with a fixed 12GB disk with NO SPACE LEFT on it.

On the next screen, I saved the file under a different name as a precaution. Then click Next and wait; it took about an hour for a 28GB file. Once the process completed, I copied the new file back to our Windows Server 2008 machine, loaded it in Hyper-V, and it was recognized!

Success.